HDMI has long been the go-to standard for getting video onto a screen, but you might not know exactly what it is. The standard can be found on TVs, computers, gaming consoles, A/V receivers, and more, so it’s no surprise that many people wonder: just what is HDMI? In short, HDMI is a standard for digitally transmitting uncompressed video and audio data, and it was originally built to replace the analog standards that came before it.

What is HDMI? Here’s everything you need to know.

A history of HDMI

HDMI dates all the way back to 2002, when the founders of the technology began work on its first iteration, HDMI 1.0. The idea was to create a new standard that would be backward compatible with DVI, a video-only standard found on many monitors at the time.

Those founders weren’t exactly unknown inventors. Instead, they were a group of international companies that wanted to develop a new standard for the next few decades of video and audio transfer. The original founders included the likes of Panasonic, Philips, Sony, Toshiba, RCA, and more, and the tech also had the support of content providers like Disney, Warner Bros., Universal, and others.

There were other goals for HDMI too. For example, the founders wanted the connector to be relatively small and to carry audio as well as video, making it easier for consumers to use. Soon after the standard was developed, the first HDMI Authorized Testing Center opened in 2003, followed by another center in Japan in 2004. That same year, only two years after the standard was first developed, a massive 5 million HDMI devices were sold.

What does HDMI mean?

You may well know what HDMI is, but what does HDMI mean? HDMI stands for High-Definition Multimedia Interface. It’s a bit of a mouthful, but it makes sense: the standard is aimed at delivering high-resolution video and audio (or multimedia).

Of course, HDMI is often followed by a number, which is the version number. Like many other standards, HDMI has been iterated upon over the years, and the higher the number, the more recent the version of HDMI.

HDMI features: What are the benefits of HDMI?

There are a number of advantages to using HDMI over other standards. HDMI is able to transport both video and audio, and that gives it a clear advantage over older standards that could only transport one or the other. The result? The average consumer, who only wants to connect a gaming console to a TV or something similar, needs just one cable.

HDMI has gotten even more versatile in recent iterations. For example, the HDMI standard can now also transfer 3D data and Ethernet data – all in a single cable, which is one of the major benefits of HDMI. That makes setting up a home theater system a whole lot easier than it otherwise would be.

It’s important to note that HDMI is a data transfer standard, and it comes with several different connectors. Besides the standard full-size connector, there are the Mini HDMI and Micro HDMI connectors, which are sometimes used in devices like camcorders, laptops, or tablets.

What is HDMI 2.0?

As mentioned, since being first invented in 2002, HDMI has been iterated upon – and newer versions offer some clear advantages. Here’s a rundown of the different versions of HDMI and the features that they brought.

HDMI 1.0 was officially released on December 9, 2002, and was able to transfer a digital video signal and up to 8 channels of audio. Essentially, it took DVI’s transmission standard and added audio support. The standard was able to transmit data at up to 4.95Gbps, which meant you could transfer video at a resolution of up to 1080p and a frame rate of 60 frames per second.
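
To see why 4.95Gbps is enough for 1080p at 60 frames per second, a rough back-of-the-envelope calculation helps. The sketch below is only an approximation under stated assumptions: it assumes 24 bits per pixel (8 bits each for red, green, and blue), assumes that roughly 80 percent of the link rate carries actual data because of signal encoding overhead, and ignores blanking intervals and audio. The function names are made up for illustration.

```python
def active_video_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Data rate of the visible pixels only, in gigabits per second."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def usable_gbps(link_gbps, encoding_efficiency=0.8):
    """Approximate payload rate of a link (assumed 80% efficiency)."""
    return link_gbps * encoding_efficiency


needed = active_video_gbps(1920, 1080, 60)  # 1080p at 60 frames per second
available = usable_gbps(4.95)               # HDMI 1.0 link rate quoted above

print(f"1080p60 needs about {needed:.2f} Gbps")
print(f"HDMI 1.0 offers about {available:.2f} Gbps of payload")
print("Fits" if needed <= available else "Does not fit")
```

Under these assumptions, 1080p at 60 frames per second needs roughly 3Gbps, which fits within the roughly 4Gbps of payload the link can carry.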

HDMI 1.1 was launched in May 2004 and added support for DVD-Audio, as well as a few small tweaks to the electrical specification.

HDMI 1.2 was the next version of the HDMI standard and was introduced in August 2005. Arriving a little over a year after HDMI 1.1, HDMI 1.2 brought a number of major changes to the standard, including much better support for PCs by supporting low-voltage sources like PCI Express.

HDMI 1.3 was released in June 2006 and increased the bandwidth of a single HDMI link to a hefty 10.2Gbps. HDMI 1.3 also added support for 10-bit, 12-bit, and 16-bit color resolution per channel, and it introduced the new HDMI Type C, or mini-HDMI, connector.
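
The jump in color depth is easier to appreciate with numbers. As a quick illustration, assuming three color channels (red, green, and blue) and taking 8 bits per channel as the baseline for comparison (an assumption, since it isn't stated above), the number of distinct colors grows like this. The function name is hypothetical.

```python
def color_count(bits_per_channel, channels=3):
    """Number of distinct colors for a given per-channel bit depth."""
    levels = 2 ** bits_per_channel  # shades available in one channel
    return levels ** channels       # combinations across all channels


for bits in (8, 10, 12, 16):
    print(f"{bits}-bit per channel: {bits * 3} bits per pixel, "
          f"{color_count(bits):,} colors")
```

Going from 8 to 10 bits per channel takes the palette from about 16.7 million colors to just over a billion.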

HDMI 1.4 launched in May 2009 and added a number of extra features that made the connector a lot more versatile. For example, it added the HDMI Ethernet Channel for sharing an internet connection. Perhaps even better was the added support for 4K resolutions at 30Hz. HDMI 1.4 also added an audio return channel, or HDMI ARC (which we’ll get into later). This allowed a TV to send data to a surround sound system and helped eliminate the need for a separate audio cable in higher-end systems.

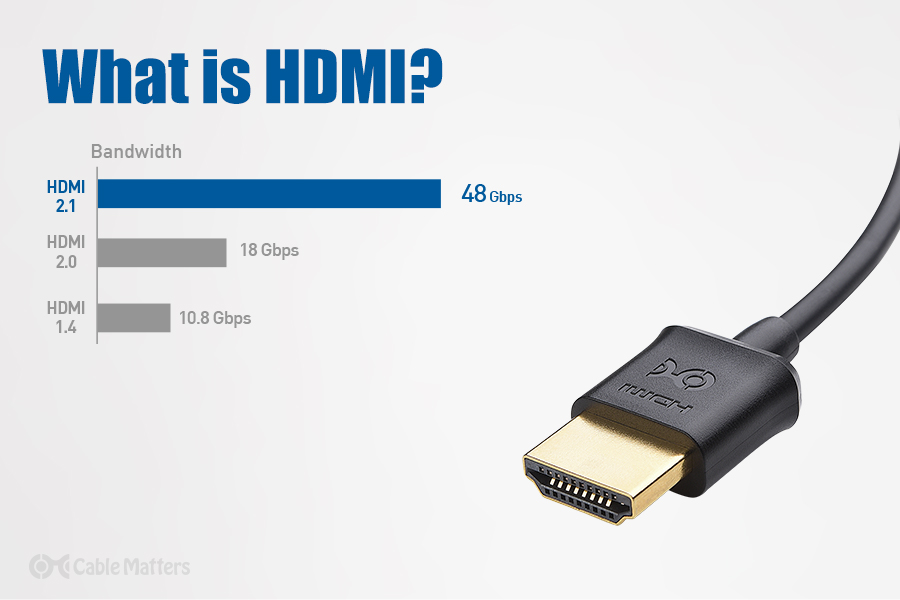

HDMI 2.0 was the first major version change since HDMI’s release, although by then the standard had already moved a long way from the original version. HDMI 2.0 was released in September 2013 and increased the bandwidth to 18Gbps, thus supporting a 60Hz refresh rate at 4K resolutions. HDMI 2.0 also added support for up to 32 audio channels. HDMI 2.0a was released in 2015, adding support for HDR tech like HDR10.

The addition of HDR is pretty important for HDMI. HDR, or High Dynamic Range, essentially allows for brighter highlights, more vivid, natural colors, and more, so with HDR, an image looks more detailed overall. To make use of this HDMI feature, a number of things have to fall into place. First, you need a TV that supports HDR and a connection, like HDMI 2.0a, that also supports it. The actual content needs to be optimized for HDR too, and thankfully more and more content from the likes of Netflix comes with HDR support. Lots of gaming consoles are adding support for HDR too, so you can enjoy those more vivid, punchier images while gaming. The PlayStation 4, Xbox One S, and Xbox One X all support HDR, though keep in mind that the actual game needs to be built to take advantage of it.

HDMI 2.1 is the latest and greatest version of HDMI, and it was released in November 2017. This version supports 48Gbps of bandwidth and a massive 10K resolution at 120Hz. It will take a few years to be widely adopted, partly because it requires a new HDMI cable, unlike earlier revisions, and partly because there won’t be any content or displays to support it for some time. However, some companies like Cable Matters have already put out cables built for the new HDMI 2.1 standard in an effort to get ahead of the game.
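
The bandwidth figures above can be turned into a rough lookup: given a video mode, which is the oldest HDMI version with enough bandwidth for it? The sketch below uses only the link rates quoted in this article. It assumes 24 bits per pixel, ignores blanking intervals and audio, and applies a single assumed 80 percent encoding efficiency to every version, which is a simplification. All names are hypothetical.

```python
# Link rates in Gbps as quoted in the article, oldest version first.
HDMI_LINK_GBPS = [
    ("1.0", 4.95),
    ("1.3", 10.2),
    ("2.0", 18.0),
    ("2.1", 48.0),
]

ENCODING_EFFICIENCY = 0.8  # assumption: share of the link rate carrying data


def active_video_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Data rate of the visible pixels only, in gigabits per second."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def oldest_version_with_bandwidth(width, height, refresh_hz, bits_per_pixel=24):
    """Return the oldest listed version whose payload rate fits the mode."""
    needed = active_video_gbps(width, height, refresh_hz, bits_per_pixel)
    for version, link_gbps in HDMI_LINK_GBPS:
        if needed <= link_gbps * ENCODING_EFFICIENCY:
            return version
    return None  # no listed version fits without compression


print(oldest_version_with_bandwidth(1920, 1080, 60))  # 1080p at 60Hz
print(oldest_version_with_bandwidth(3840, 2160, 60))  # 4K at 60Hz
```

Bandwidth is only one requirement. A version also has to define the video mode in question, so treat the result as a lower bound rather than a guarantee of compatibility.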

What is HDMI ARC?

Many home theater setups don’t use the TV’s speakers – and instead, make use of a separate speaker system for improved audio. Only one problem: If your smart TV is generating the content by streaming Netflix or Spotify, for example, or with the built-in tuner, how are you supposed to use that audio setup without a separate cable? HDMI ARC solves that issue.

Now, you can have an HDMI cable between an audio/video receiver and your TV and still use the speakers connected to that receiver, even when playing content directly from the TV.

HDMI ARC also comes in handy if you have an external soundbar. For example, you can connect all your gaming consoles, streaming devices, and so on, to the TV directly, then connect the HDMI ARC port to a soundbar, allowing you to hear high-quality audio through the soundbar when using all your devices.

HDMI ARC isn’t as widely supported as it should be. While many TV manufacturers have HDMI ARC ports on their TVs, sometimes those ports don’t support 5.1 surround – so if you’re having trouble implementing surround sound on your setup through an HDMI ARC port, that might be why.

Is DisplayPort better than HDMI?

HDMI isn’t the only standard aimed at delivering video and audio channels in one connector. DisplayPort is another standard. But is DisplayPort better than HDMI? Well, that depends.

DisplayPort is currently up to DisplayPort 1.4, which supports up to an 8K resolution at 60Hz, with HDR. DisplayPort is much more commonly used on computer monitors and computers than on TVs and other consumer devices, and there’s a good reason for that: it supports tech like AMD’s FreeSync and Nvidia’s G-Sync, which help create a tear-free gaming experience. Unfortunately, few manufacturers are using DisplayPort 1.4, and many are stuck at DisplayPort 1.2. If you only have access to an HDMI 2.0 port or a DisplayPort 1.2 port, as is the case on many monitors, then HDMI may be better thanks to the HDR support. That said, if your monitor has a newer version of DisplayPort and you’re a hardcore gamer, then using it over HDMI may be the way to go.

Can you convert DisplayPort and USB-C to HDMI?

Thankfully, you can convert most digital display signals to HDMI, making it easy to connect to an HDMI display through a DisplayPort output on your laptop, for example. There are plenty of adapters to convert to HDMI, and they’re relatively inexpensive too. There’s a simple adapter to convert DisplayPort to HDMI, for example. Converting from USB-C and Thunderbolt 3 is also possible with a range of USB-C to HDMI adapters or cables. Converting to HDMI from a DVI source (or vice versa) can be done with a simple cable because the video signals are electrically identical, so it is essentially a physical connector change.

HDMI signals can also be converted. Notably, you can compress an HDMI signal to work over Ethernet, which makes it easier to transmit HDMI over long distances. That’s perfect for conference rooms, classrooms, or home theater setups that need to send a video signal up to 300 feet.